In Epiphan’s Support department, we often talk with customers about the difference between frame rate and refresh rate. The topic typically comes up when discussing the compatibility of our products with various video signals, but it sometimes confuses customers and Epiphan support staff alike when the terms are used incorrectly or interchangeably. Today I’d like to clear up the mystery and explain the differences between these two similar, but distinct, terms.

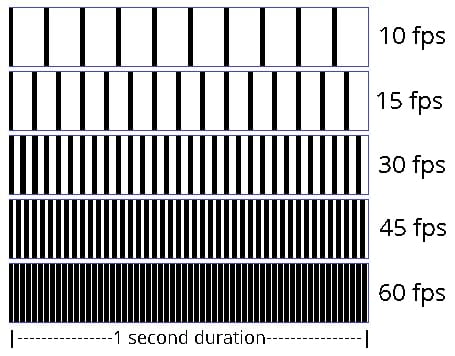

The confusion between the two is understandable – both refresh rate and frame rate refer to the number of times a still frame is shown per second. For example, both a 60 Hz signal and a 60 fps video are made up of 60 still images per second.

But refresh rate and frame rate are not actually the same thing! Refresh rate applies to video signals such as HDMI™, VGA, DVI etc, whereas frame rate applies to encoded video recordings, like an AVI file or a livestream out to YouTube.

Refresh rate: the beginnings

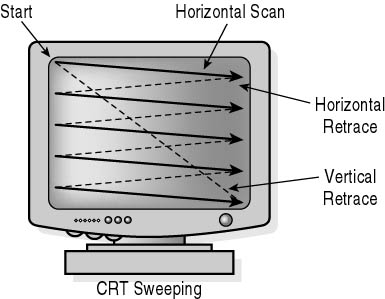

To understand the difference between the two terms, we first need to look at “refresh rate”, which originated alongside the cathode ray tube (CRT) computer monitor/television set. A CRT monitor is made up of a glass picture tube with a phosphor screen on one side and an electron gun at the back. The electron gun fires a beam at the screen and lights up the phosphor. The beam scans across the screen line by line to create one full image, or frame. The number of times per second this still image is drawn is known as the refresh rate, which is measured in hertz (Hz).

Once the beam reaches the bottom, it turns off, returns to the top left corner and starts again. This pause between refreshes is called the “blanking interval”. When you notice your CRT monitor “flickering”, what you’re seeing is a low refresh rate, so low that your eyes can pick out the blanking intervals between refreshes. A refresh rate of at least 80 Hz generally produces less flicker.

Liquid crystal displays (LCDs) work on a different principle than CRT monitors. LCDs don’t blank after each image refresh. Instead, they simply reconfigure their liquid crystal structure to display each successive image, emitting a steady brightness regardless of the input signal’s refresh rate.

Refresh rate is important to the story because a signal’s refresh rate affects how modern-day digital signals are encoded. But before I get to that, I’d first like to clarify how an encoded signal’s frame rate differs from a raw signal’s refresh rate.

Frame rate: the advantage in encoding

Encoded video is inherently quite different from raw video signals – it has never had to take into account physical, real-world concerns like electron guns.

Consider encoding a signal without the use of compression. For example, a raw 1080p 60 fps signal would end up having the following total bitrate:

Horizontal resolution × vertical resolution × frames per second × bits per pixel

= 1920 × 1080 × 60 × 24

= 2,985,984,000 bits per second

… or roughly 356 megabytes per second (counting a megabyte as 1,048,576 bytes).

This adds up quickly – one minute’s worth of uncompressed video would be almost 21 gigabytes! Obviously this wouldn’t be reasonable for any length of time and certainly wouldn’t be feasible when streaming video out to the internet! With encoding and compression, however, that same video would maintain fantastic quality at perhaps 1.5 megabytes per second (~12,000 kbps).
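The arithmetic above can be checked with a few lines of Python. This is only a sketch: the function and variable names are made up for illustration, and it assumes binary units (MiB and GiB), which is what the 356 and 21 figures correspond to. The 12,000 kbps value is the example encoded bitrate from the text, not a fixed property of any encoder.

```python
def raw_bitrate_bps(width, height, fps, bits_per_pixel):
    """Bitrate of uncompressed video in bits per second."""
    return width * height * fps * bits_per_pixel


# 1080p at 60 fps with 24 bits per pixel, as in the example above.
raw_bps = raw_bitrate_bps(1920, 1080, 60, 24)

raw_bytes_per_s = raw_bps / 8
mib_per_s = raw_bytes_per_s / 2**20          # binary megabytes per second
gib_per_min = raw_bytes_per_s * 60 / 2**30   # binary gigabytes per minute

# Example encoded bitrate from the text: ~12,000 kbps.
encoded_bps = 12_000 * 1_000
compression_ratio = raw_bps / encoded_bps

print(f"{raw_bps:,} bits/s")
print(f"{mib_per_s:.0f} MiB/s, {gib_per_min:.1f} GiB per minute")
print(f"about {compression_ratio:.0f}:1 against a 12,000 kbps encode")
```

In other words, the encoded stream in this example carries roughly 1/250th of the raw data.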

To enable such a reduction in data size, encoded video needs to allow for compression and reduced colorspaces. (Encoding hardware or software is used to accomplish this feat, such as Epiphan’s live production mixer, Pearl.) For example, changing the colorspace from RGB24 to YUV420 trims the effective bits per pixel from 24 to 12.
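To see where the 12 comes from, here is a small sketch that assumes “YUV420” means 8-bit 4:2:0 chroma subsampling: every pixel keeps its own brightness (Y) sample, while each 2 × 2 block of pixels shares one U and one V sample. The helper name is hypothetical.

```python
def yuv_bits_per_pixel(h_subsample, v_subsample, bit_depth=8):
    """Average bits per pixel when both chroma planes are subsampled.

    h_subsample and v_subsample say how many pixels share one chroma
    sample horizontally and vertically (2 and 2 for 4:2:0).
    """
    luma_bits = bit_depth
    chroma_bits = 2 * bit_depth / (h_subsample * v_subsample)
    return luma_bits + chroma_bits


rgb24_bpp = 3 * 8                        # three 8-bit channels per pixel
yuv420_bpp = yuv_bits_per_pixel(2, 2)    # 8 luma bits + 4 chroma bits

# Raw 1080p 60 fps bitrate at each colorspace, before any compression.
rgb24_bps = 1920 * 1080 * 60 * rgb24_bpp
yuv420_bps = 1920 * 1080 * 60 * yuv420_bpp

print(rgb24_bpp, yuv420_bpp)
print(f"{rgb24_bps:,} vs {yuv420_bps:,.0f} bits/s")
```

Halving the bits per pixel halves the raw bitrate before the encoder has applied any compression at all.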

Once encoding is complete, the result takes up less space on your hard drive as a recording and needs less bandwidth when streaming.

More frames isn’t always better!

When capturing and encoding a raw video signal, the frame rate in the resulting output won’t necessarily match the signal’s initial refresh rate. Epiphan’s capture (and streaming/recording) products, such as AV.io HD, are able to take in almost any standard video signal, but due to the bandwidth limitations of the USB 3.0 output cable, the resulting encoded signals may have a lower frame rate. For example, our capture cards can receive a 1080p 120 Hz HDMI signal, but you might not be able to get 120 frames captured from this – a perfectly acceptable 60 fps rate is more likely.
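One simple way to picture a 120 Hz signal becoming a 60 fps capture is even frame dropping. The sketch below is purely illustrative and is not a description of how AV.io HD or any other Epiphan product works internally; the function name is hypothetical.

```python
def kept_frame_indices(source_hz, target_fps, duration_s=1):
    """Indices of the source frames kept when frames are dropped evenly.

    Assumes integer rates. The output rate is capped at the source
    rate, since dropping frames can never create new ones.
    """
    target_fps = min(target_fps, source_hz)
    frames_out = target_fps * duration_s
    return [i * source_hz // target_fps for i in range(frames_out)]


# A 120 Hz input captured at 60 fps keeps every second frame.
kept = kept_frame_indices(120, 60)
print(len(kept), kept[:5])

# A 60 Hz input captured at 24 fps keeps an uneven but regular pattern.
print(kept_frame_indices(60, 24)[:5])
```

Each second of output still covers one second of real time; it simply contains fewer of the source’s still images.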

And just how many frames per second is enough? That depends! A higher fps isn’t always better. Just because your signal comes in at 60 Hz doesn’t mean you need 60 fps for your recording or stream – it depends on the nature of the content.

Movies are an easy example. The typical frame rate for movies is only 24 fps and most Blu-ray movies (the current standard for home-theatre excellence) are presented at this frame rate. Interestingly, the movie industry is currently testing out a frame rate that is much higher. Peter Jackson’s The Hobbit: An Unexpected Journey was the first movie to be filmed at 48 fps, and it was met with mixed reviews by critics and audiences alike.

Many moviegoers claimed the jump from the standard 24 fps to a heightened 48 fps made the footage feel too realistic, ruining the suspension of disbelief. For movies, and for any content where the action moves at about the same speed as a character in a film, the lower 24 fps frame rate is more desirable. It is the gold standard we see every day when watching movies on DVD and Blu-ray.

Another example of a desirable low frame rate is seen in the case of media with little-to-no motion, like slides in a presentation. If slides or images are the only form of content present, there is no noticeable difference between encoding at 30 fps and encoding at, say, 10 fps, because the full image changes only once every few seconds or minutes, and the rest of the time each frame is exactly the same as the previous one. When it doesn’t hurt the viewer experience, selecting a lower frame rate can help reduce bandwidth, file size and CPU usage.
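A quick count makes the point. The sketch below uses made-up numbers (a 10-minute presentation with a new slide every 30 seconds) and assumes slide changes land exactly on frame boundaries; the function name is hypothetical.

```python
import math


def slide_frame_stats(duration_s, fps, seconds_per_slide):
    """Return (total frames, frames showing a new image, repeated frames)."""
    total = duration_s * fps
    # Only the first frame of each slide differs from the frame before it.
    new_images = math.ceil(duration_s / seconds_per_slide)
    return total, new_images, total - new_images


# Hypothetical 10-minute slide deck, one slide every 30 seconds.
at_30fps = slide_frame_stats(600, 30, 30)
at_10fps = slide_frame_stats(600, 10, 30)

print(at_30fps)
print(at_10fps)
```

Both frame rates show the same 20 distinct images. Dropping from 30 fps to 10 fps removes 12,000 frames, every one of which repeated the frame before it.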

Higher frame rates, such as 60 fps, are best suited to visual media that changes quickly, where you need to see every little detail with as much precision as possible. Think fast-paced sports like football or an action-packed video game – in both cases, encoding at a higher frame rate ensures viewers never miss a moment.

In conclusion…

So there you have it – frame rate and refresh rate: two terms that both refer to the number of still images displayed per second but are fundamentally distinct from each other. Refresh rate originated with the CRT monitor and refers to the images per second in raw video signals, while frame rate specifically concerns encoded video and can be set to different values depending on the nature of the captured content.

Are there any other technical terms you feel could use a good explanation? Let me know in the comments below and I’d be happy to clear up any confusion.

If you found this post helpful, make sure to check out some of our other similar informative posts concerning the latest AV technology, such as an explanation of how audio is possible over HDMI connections, and a description of extended display identification data (EDID) and why it’s important in capturing video.
