It’s a common challenge with remote productions: you want to bring in a guest who can contribute a lot to your content, but their feed comes in grainy and full of stutters and stops. The culprit: your guest’s less-than-stellar network conditions. Do you cut them from the program, or live with the drop in quality?

With the Secure Reliable Transport (SRT) protocol, the answer is easy: count them in, and don’t worry about it hurting your quality. SRT will compensate for the shortcomings of their network.

So what is the SRT protocol, exactly? And how can you take advantage of SRT’s game-changing capabilities for streaming and remote video production? Read on for answers to these and other questions about this powerful protocol.

The best SRT encoder for remote contribution

The portable and powerful Pearl Nano is the perfect SRT hardware encoder. Compact and cost-effective to ship, easy to set up, and simple to use, this award-winning device will meet all your SRT needs.

What is the SRT protocol?

SRT is an open-source video transport protocol developed by Haivision. SRT stands for “Secure Reliable Transport,” which reflects two of its major benefits for video and audio streaming: security and reliability.

The goal of SRT is to deliver high-quality, low-latency video streaming over unpredictable public networks, which it does by:

- Offering unparalleled control over video and audio transmission, including the ability to adjust latency, buffering, and other key parameters to suit network conditions

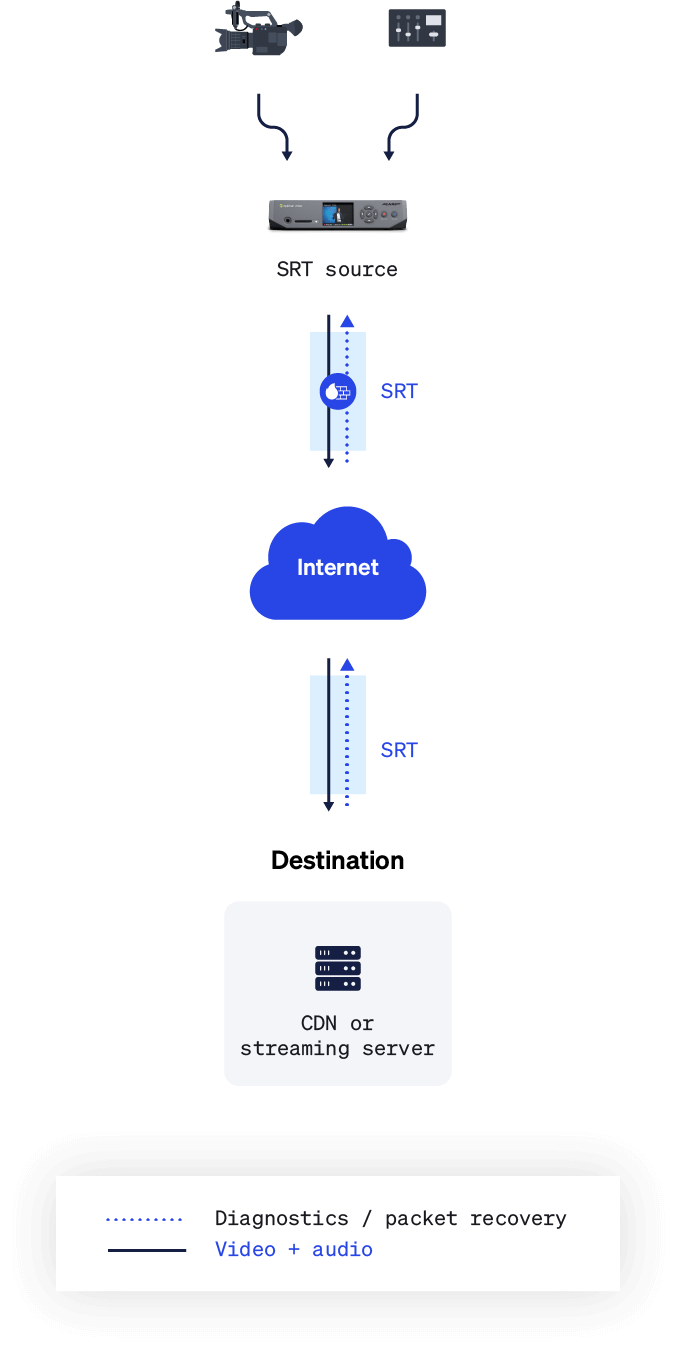

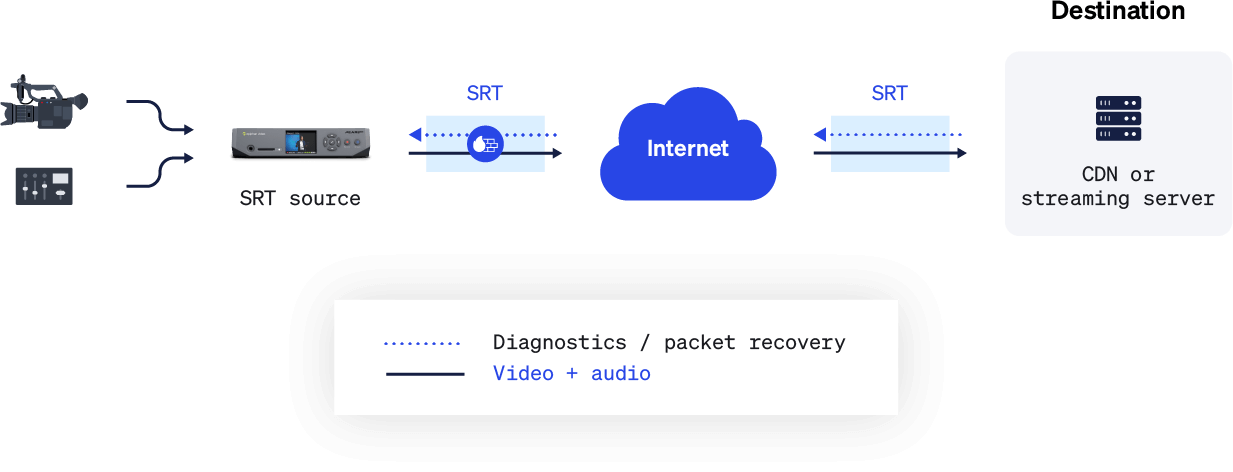

- Incorporating a unique, bi-directional User Datagram Protocol (UDP) stream that continuously sends and receives control data during streaming

- Compensating for packet loss, jitter, and other threats to quality based on UDP stream data

SRT is shaking up not just the realm of Internet streaming but the broadcast world as well. That’s because SRT technology can replace costly (and logistically problematic) satellite trucks and private networks for many remote video applications. Think reports from the field and contributions from guests in another city, country, or continent.

Is SRT better than RTMP?

From a technological standpoint, yes, SRT is superior to the Real-Time Messaging Protocol (RTMP). A lot of it comes down to the fact that SRT is a more modern protocol. Ready for a little history lesson?

RTMP was created way back in the early 2000s primarily as a way to stream video, audio, and data from servers to Macromedia’s Flash player. When Adobe acquired Macromedia, along with RTMP, in 2005, the company repurposed the protocol for broader streaming. Ultimately, RTMP served its purpose. But it’s just not cut out for many modern streaming contexts.

So, what sets SRT apart from RTMP? Built into SRT is a unique, bi-directional UDP stream that continuously sends and receives control data during streaming. Through this, SRT can adapt to fluctuating network conditions to minimize packet loss, jitter, and other threats to quality. This makes the protocol a viable solution for sending video over the worst networks, or unpredictable networks such as the public Internet.

Compare this to RTMP or RTMPS, which send source data to the target streaming server or content delivery network (CDN) without regard for any data that may get lost along the way. Often the result is a suboptimal viewing experience for your audience at the other end.

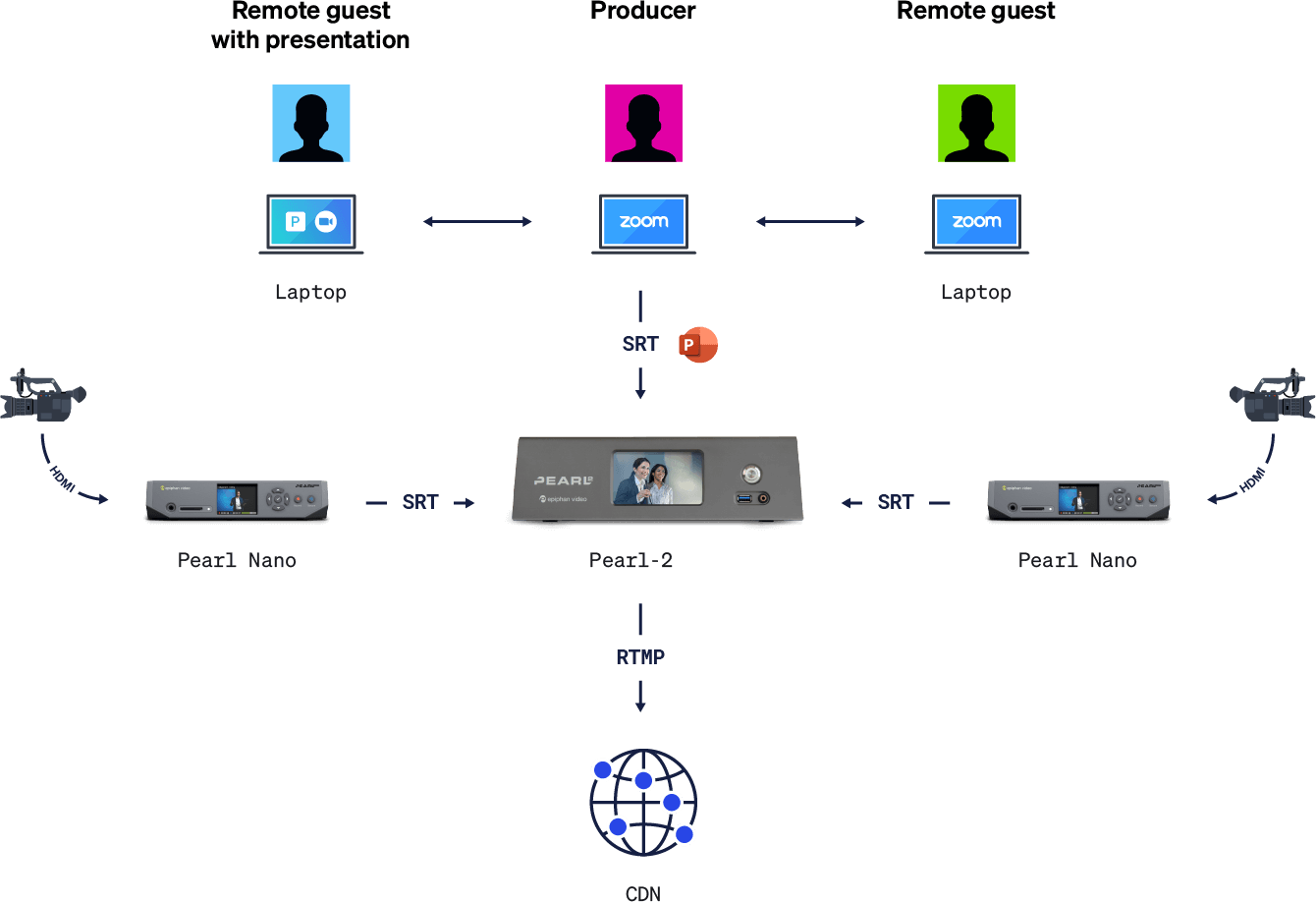

All that said, these protocols can co-exist. For example, you could use SRT to bring remote guest feeds into your production encoder but stream out your program via RTMPS, because that’s what your preferred CDN supports. In any case, your remote guest feeds will look and sound much better with SRT doing the heavy lifting on the contribution side.

Is SRT secure?

SRT is a highly secure streaming protocol. It’s what puts the “S” in “SRT,” after all.

The SRT protocol offers up to 256-bit Advanced Encryption Standard (AES) encryption, safeguarding data from contribution to distribution. And the protocol plays nice with firewalls. Multiple handshaking methods and flexible network address translation (NAT) traversal mean there’s rarely a need to ask a network admin to make policy exceptions, or engage one in the first place.
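To make the encryption and handshaking options a little more concrete, here is a minimal Python sketch that assembles an encrypted SRT address. It assumes the URI query style used by the open-source `srt-live-transmit` tool (`mode`, `latency`, `passphrase`, `pbkeylen`). The function name, host, port, and passphrase are hypothetical, and parameter names and units can differ between encoders, so check your own device's documentation.

```python
from urllib.parse import urlencode

# AES key lengths in bytes: 16 = AES-128, 24 = AES-192, 32 = AES-256.
VALID_KEY_LENGTHS = {16, 24, 32}
# The three SRT connection (handshake) modes.
VALID_MODES = {"caller", "listener", "rendezvous"}


def build_srt_uri(host, port, passphrase, key_bytes=32, latency_ms=120, mode="caller"):
    """Build an SRT URI with encryption settings (hypothetical helper)."""
    if key_bytes not in VALID_KEY_LENGTHS:
        raise ValueError("key_bytes must be 16, 24, or 32")
    # SRT passphrases must be between 10 and 79 characters long.
    if not 10 <= len(passphrase) <= 79:
        raise ValueError("passphrase must be 10 to 79 characters")
    if mode not in VALID_MODES:
        raise ValueError("mode must be caller, listener, or rendezvous")
    query = urlencode({
        "mode": mode,
        "latency": latency_ms,  # assumed to be milliseconds here
        "passphrase": passphrase,
        "pbkeylen": key_bytes,
    })
    return f"srt://{host}:{port}?{query}"


if __name__ == "__main__":
    # 203.0.113.10 is a documentation-only example address.
    print(build_srt_uri("203.0.113.10", 9000, "correct-horse-battery"))
```

Both ends of the connection need the same passphrase and key length, so it helps to generate the address once and share it with your remote guest.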

Who uses SRT protocol?

Since its invention by Haivision in the early 2010s, SRT has quickly become the go-to streaming protocol for remote contribution. For example, NASA uses SRT to distribute live video across control rooms for low-latency, real-time monitoring. One of the largest demonstrations of SRT’s potential was the 2020 virtual NFL draft, where producers used SRT to deliver more than 600 live feeds.

Beyond high-profile examples like these, there are the countless content creators that have made SRT a key part of their video production workflows. That includes Epiphan: the SRT protocol is involved in the lion’s share of live show episodes, webinars, and other live productions we do.

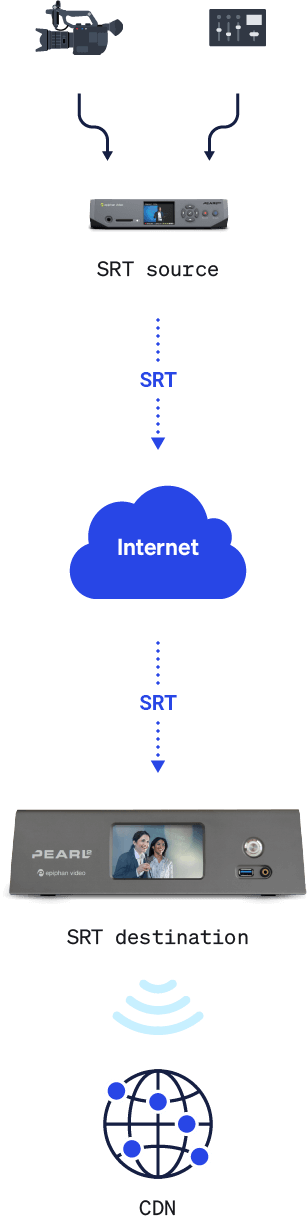

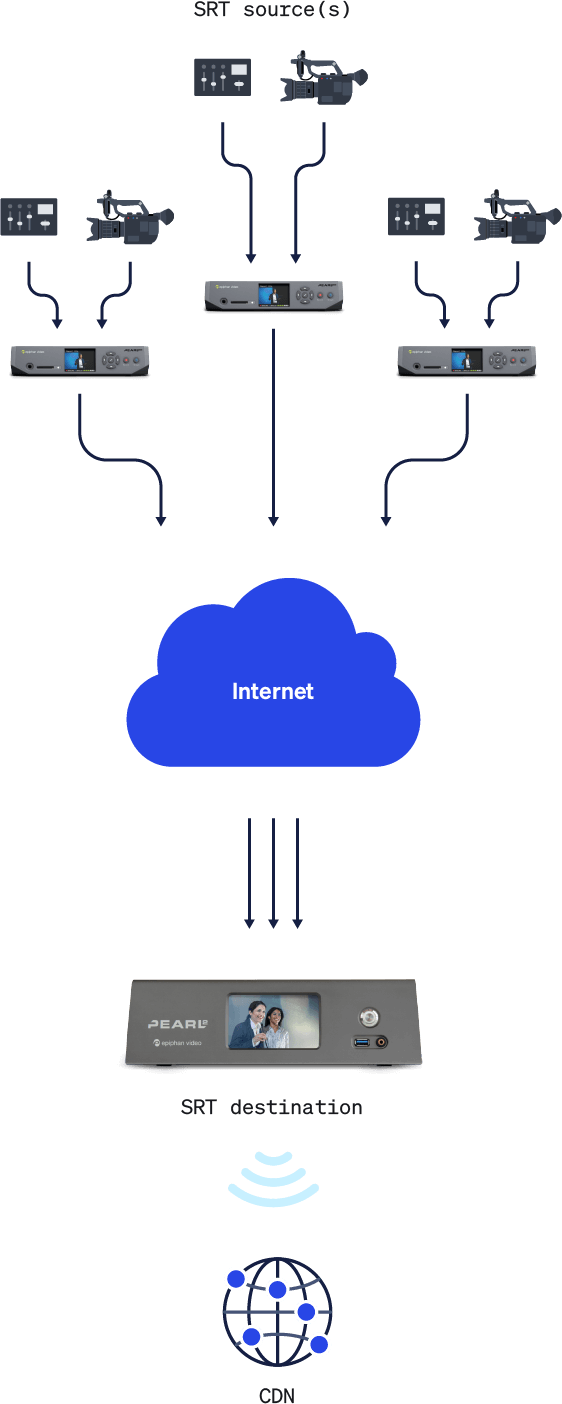

What do you need to use SRT?

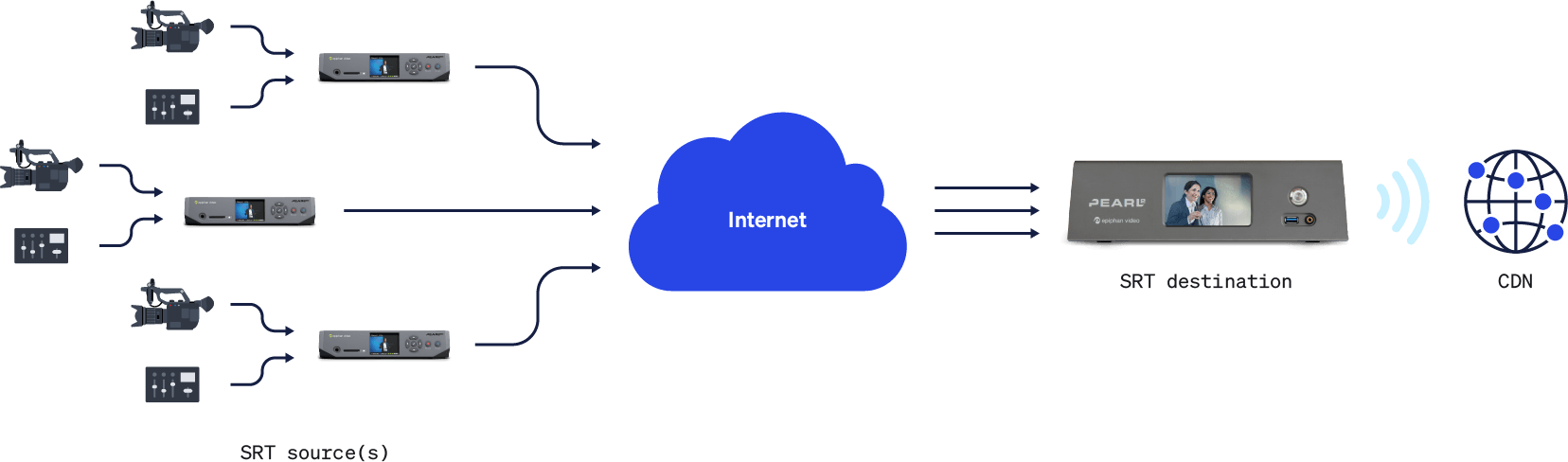

You’ll need solutions that can encode and/or decode the SRT protocol, depending on your application. Any remote guests will need an SRT encoder on their end to send an SRT signal over the Internet to the production encoder, which can then decode the signal and work with it for production.

One remote guest

Multiple remote guests

Finding SRT-capable solutions

With SRT being an open-source, royalty-free technology that solves long-standing challenges, companies have been quick to adopt the protocol for use in their own products. These companies make up the SRT Alliance, whose members develop, manufacture, and operate SRT video encoders and decoders, content delivery networks, media gateways, capture cards, cloud infrastructure, and cameras.

This growing ecosystem is one of the biggest benefits of buying in. It means that, if you’re looking to use SRT yourself, there are plenty of options to choose from. For example, you could use dedicated hardware that supports this open-source protocol, like a camera or hardware encoder, or streaming software such as OBS Studio.

Besides being a frequent user of SRT, Epiphan is a proud member of the SRT Alliance. We’ve brought SRT encoding and decoding to our award-winning Pearl video production systems. That includes Pearl Nano, the best SRT encoder for remote contribution. Our cloud-based video production platform, Epiphan Unify, can work with SRT signals from anywhere.

Streamline your remote production workflow – in the cloud

Remotely stream, record, switch, and mix broadcast-quality content and live events from anywhere with the cloud-powered Epiphan Unify. Compatible with any encoder or camera that supports SRT.

Establishing a backchannel for real-time communication

Producers need some way to communicate with guests and contributors in real time to ensure they’re set to go live and cue them up for their appearance. A backchannel for communication is also essential when your production involves interaction between remote participants.

Your backchannel for real-time interaction over SRT can be a separate SRT stream or a video conferencing platform. What option makes the most sense depends on the performance of the networks involved as well as the physical distance between sources and the destination (i.e., the round trip time). With high enough network bandwidth and low enough round trip times, it’s possible to use a parallel SRT stream as a communication backchannel. Otherwise, a video conferencing platform can do the trick.

How is SRT latency calculated?

There’s a bit of a learning curve with the SRT protocol, specifically when it comes to the latency tuning mechanic. If you’re wrestling with this side of it, no worries. Here, we’ll break it down step by step.

SRT: Key terms and concepts

- Latency: The larger of the latency values configured on the encoder and the decoder. This value specifies how long the decoder will buffer received packets before decoding.

- Round trip time (RTT): How long it takes (in milliseconds) for a single packet to travel from a source to your destination and back. This measure is important when it comes to setting your latency.

- Receive rate: The data upload speed (in megabits per second) from the input’s network.

- Buffer: The number of SRT packets received and waiting to be decoded.

- Packet loss: The percentage of packets lost as reported by the SRT decoder in the last measurement interval.

- Re-sent packets: How many lost packets were retransmitted to the destination via SRT’s UDP stream.

- Total packets received/lost: A comparison of the number of packets received versus those lost.
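As a rough illustration of how the packet counters relate to the packet loss percentage, here is a small Python sketch. It assumes loss is measured as lost packets divided by all packets expected (received plus lost) over the same interval. Your decoder may calculate its figure differently, and the function name is hypothetical.

```python
def packet_loss_percent(received, lost):
    """Return packet loss as a percentage of all packets expected.

    Assumption: packets expected = packets received + packets lost.
    """
    expected = received + lost
    if expected == 0:
        return 0.0  # nothing was sent in this interval, so nothing was lost
    return 100.0 * lost / expected


if __name__ == "__main__":
    # 9,900 packets arrived and 100 went missing in the same interval.
    print(packet_loss_percent(9900, 100))
```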

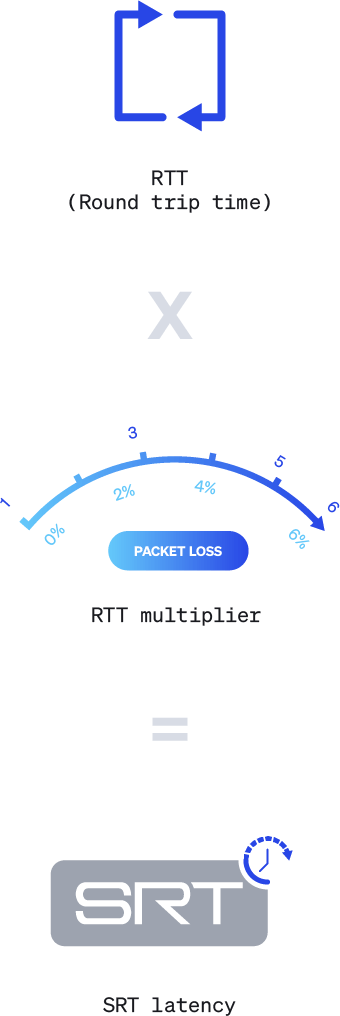

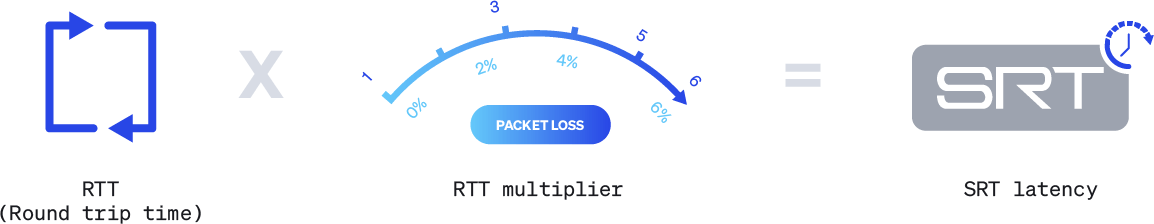

How to calculate SRT latency

For each contributor using the SRT protocol, follow this process:

- Measure your RTT. Your SRT decoder should report this.

- Measure the packet loss rate. Your SRT decoder should report this.

- Measure your network bandwidth. (If you’re using a Pearl system, you can find a network test tool under Configuration > Network > Network diagnostics.)

- Determine your RTT multiplier using the table below.

| Worst case loss rate (%) | RTT multiplier | Bandwidth overhead (%) | Minimum SRT latency in ms (for RTT <= 20 ms) |
|---|---|---|---|
| <= 1 | 3 | 33 | 60 |
| <= 3 | 4 | 25 | 80 |
| <= 7 | 5 | 20 | 100 |
| <= 10 | 6 | 17 | 120 |

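- Determine your SRT latency using the formula RTT * RTT multiplier. For example, an RTT of 50 ms with a worst-case loss rate of 3% gives 50 * 4 = 200 ms. If your RTT is 20 ms or less, use the minimum SRT latency from the table instead.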

- Ensure you have enough uplink bandwidth to send the video. For example, if the available uplink bandwidth is 4 Mbps and the stream bitrate is set to 6 Mbps, there’ll be significant packet loss that SRT can’t help.

- Confirm that the send buffer is less than or equal to your SRT latency. When the send buffer approaches the SRT latency, packet drops will occur that SRT can’t recover from.
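The steps above can be sketched in a few lines of Python. This is an illustrative helper, not an official tool: the function names are hypothetical, the table values are copied from this article, and two details are assumptions. First, the table's minimum latency is treated as a floor on the result. Second, the bandwidth overhead column is read as extra headroom on top of the stream bitrate.

```python
# Each row: (max loss %, RTT multiplier, bandwidth overhead %, minimum latency in ms)
SRT_TABLE = [
    (1.0, 3, 33, 60),
    (3.0, 4, 25, 80),
    (7.0, 5, 20, 100),
    (10.0, 6, 17, 120),
]


def lookup_row(loss_pct):
    """Find the first table row whose loss limit covers the measured loss."""
    if loss_pct < 0:
        raise ValueError("loss percentage cannot be negative")
    for row in SRT_TABLE:
        if loss_pct <= row[0]:
            return row
    raise ValueError("loss above 10% is not covered by the table")


def srt_latency_ms(rtt_ms, loss_pct):
    """RTT * RTT multiplier, never below the table's minimum (assumption)."""
    _, multiplier, _, min_latency = lookup_row(loss_pct)
    return max(rtt_ms * multiplier, min_latency)


def required_uplink_mbps(bitrate_mbps, loss_pct):
    """Stream bitrate plus the table's overhead percentage (assumption)."""
    _, _, overhead_pct, _ = lookup_row(loss_pct)
    return bitrate_mbps * (100 + overhead_pct) / 100


if __name__ == "__main__":
    rtt_ms, loss_pct, bitrate_mbps = 50, 2.0, 6
    print("SRT latency (ms):", srt_latency_ms(rtt_ms, loss_pct))
    print("Uplink needed (Mbps):", required_uplink_mbps(bitrate_mbps, loss_pct))
```

Run it once per contributor with the RTT and loss figures your decoder reports, then compare the uplink estimate against the measured bandwidth on the contributor's side.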

Is low latency a must?

If your application doesn’t require the lowest possible latency, we recommend adding extra latency on top of the minimum value you calculated to account for growth in RTT or packet loss during streaming.

Master SRT with award-winning solutions

Epiphan Pearl hardware encoders fully support the SRT protocol. Pearl systems feature multiple built-in inputs for video and professional audio, simplifying setup by letting you directly connect advanced video and audio gear for the highest-quality SRT streams. Plus, end-to-end control through Epiphan Cloud makes it possible to configure and test contribution encoders located anywhere in the world. This reduces the chance of errors and simplifies production for everyone involved.

Learn more about SRT protocol support on Pearl systems on our website. And be sure to stay up to date on Epiphan Unify, a new cloud-powered video production platform that will work with any SRT-capable encoder or camera.

SRT streaming on YouTube