Researching how to pick a camera for live streaming? Want to know which type of camera will suit your specific use case? Looking to compare options and understand the differences between pricing categories? Want to know which models other users like? We’ve got answers.

Which cameras are suitable for live streaming?

How do you know a camera is good for live streaming? Can you use the one you already own? There are many features you should consider when choosing a camera for streaming. While some features are nice to have in a streaming camera, there are four that are simply essential.

Four essential criteria for live streaming cameras

1. Clean HDMI out

To live stream from a camera, you have to capture the signal coming directly from its HDMI or SDI out port. Along with the video feed, some cameras will also send all the user interface (UI) elements visible on the display (e.g., battery life, exposure, aperture). To be suitable for live streaming, your camera has to be capable of sending a “clean” signal over HDMI, i.e., a signal without any UI elements visible. Unless it’s clean by default, there should be a menu setting you can toggle.

Pro tip: An easy way to find out whether your camera has a clean HDMI out is to search online: “[your camera model] clean HDMI output”

2. Power supply / AC adapter-ready

Live streams can run for hours. Most internal batteries can only last for about 20 minutes. Make sure there’s an option to get an AC power adapter for your camera (and get it!).

3. Unlimited runtime

For safety and battery conservation reasons, some cameras (especially DSLR models) will automatically shut off after about 30 minutes of inactivity. Automatic shutoff will not be acceptable for longer live streams. Check to see if your camera has this safety feature and whether there’s a way to disable it in settings.

4. No overheating

If you are planning to stream for over an hour, camera overheating may become an issue. Some mirrorless and DSLR cameras can overheat, especially when powered over USB. One way to prevent this is to use something called a dummy battery and an AC power adapter instead of USB power. Even so, some cameras are just more prone to overheating than others. Be sure to research this before buying.

Whether it’s a DSLR, a mirrorless camera, a camcorder, a cinema camera, or any other type, a camera that meets these four criteria is ready for live streaming. Webcams, on the other hand, are designed specifically for streaming, so it’s safe to assume that most of them come out of the box ready to live stream. It is also safe to assume that all camera models listed in this article comply with these guidelines.
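If you are comparing several cameras, the four criteria can be treated as a simple pass/fail checklist. The sketch below is only an illustration: the class, field names, and the example camera are made up for this purpose, and the answers still have to come from your own research on each model.

```python
from dataclasses import dataclass


@dataclass
class CameraChecklist:
    """Answers to the four essential criteria (field names are illustrative)."""
    clean_hdmi_out: bool
    ac_power_option: bool
    unlimited_runtime: bool
    no_overheating: bool


def failed_criteria(camera: CameraChecklist) -> list[str]:
    """Return the names of the criteria the camera does not meet."""
    return [name for name, ok in vars(camera).items() if not ok]


def ready_for_streaming(camera: CameraChecklist) -> bool:
    """A camera is ready only when all four criteria are met."""
    return not failed_criteria(camera)


# Hypothetical older DSLR whose automatic shutoff cannot be disabled.
older_dslr = CameraChecklist(
    clean_hdmi_out=True,
    ac_power_option=True,
    unlimited_runtime=False,
    no_overheating=True,
)
print(failed_criteria(older_dslr))
print(ready_for_streaming(older_dslr))
```

Listing the failed criteria, rather than returning a bare yes or no, makes it easier to see whether a problem can be fixed with a menu setting or an accessory.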

Other important aspects to consider

In addition to the four essential criteria, there are a few other aspects to consider.

Output resolution

Today, a camera should be able to output a minimum of 1280×720 (i.e., 720p) resolution. We suggest going for at least 1920×1080 (i.e., 1080p), which most cameras today do offer.

Wondering whether you need 4K streaming? Chances are you don’t. Despite the ever-growing ubiquity of 4K displays, streaming in 4K is still unnecessary in most cases. For one, most viewers watch videos on their mobile devices (for YouTube, it’s over 70 percent of viewers), where even 1080p is more than enough for an enjoyable experience. Another reason is that sending and receiving 4K requires significant resources in terms of both encoding and bandwidth. Essentially, it’s a significant investment for a dubious advantage. Investing in a 4K camera (as well as powerful encoding hardware) is only reasonable when you know viewers will actually watch your live video on 4K displays.

Frame rate

Frame rate is another important aspect to consider, especially if you are planning to stream fast-paced activities like sports. For average-paced activities like interviews, 30 fps is reasonable; however, 60 to 120 fps is recommended for capturing brisk action.
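To get a feel for why higher resolutions and frame rates demand more encoding power and bandwidth, it helps to compare how many raw pixels the camera produces each second. The sketch below assumes that “4K” means UHD (3840×2160), which this article does not specify. Compressed bitrates do not scale exactly with pixel count, so treat the ratios as a rough guide only.

```python
# Pixel dimensions per resolution label. "4K" is assumed to mean
# UHD (3840x2160); the article itself does not pin this down.
RESOLUTIONS = {
    "720p": (1280, 720),
    "1080p": (1920, 1080),
    "4K": (3840, 2160),
}


def pixels_per_second(resolution: str, fps: int) -> int:
    """Raw pixels produced each second, before any compression."""
    width, height = RESOLUTIONS[resolution]
    return width * height * fps


baseline = pixels_per_second("1080p", 30)
for resolution, fps in [("720p", 30), ("1080p", 30), ("1080p", 60), ("4K", 30)]:
    rate = pixels_per_second(resolution, fps)
    print(f"{resolution} at {fps} fps: {rate:,} pixels/s ({rate / baseline:.2f}x 1080p30)")
```

Under these assumptions, 4K at 30 fps carries four times the raw pixels of 1080p at 30 fps, and doubling the frame rate doubles the load.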

Autofocus

We’ve all seen those videos where the camera just can’t seem to focus on anything. If you are planning to move about in the shot or show a close-up of something, fast and reliable autofocus is extremely important.

Another frequently overlooked aspect is how loud the autofocus is. If there’s a lot of noise coming from the camera focusing, microphones could pick it right up, ruining audio. We suggest researching online what users say about a camera’s autofocus before purchasing.

Audio pathway

Always consider the path of your audio signal. Are you capturing sound with a mic separately, or is it routed through your camera? If it’s the latter, pay attention to the camera’s audio inputs. Basic cameras come with a 3.5-mm jack while more advanced models may offer professional XLR inputs. Some cameras don’t have any external audio inputs at all. Cameras like these rely on an internal microphone, which rarely produces great results.

An advantage of routing audio through your camera is that it eliminates sync issues because the audio and video signals arrive at the same time.

Pro tip: Check to see if your camera has live audio throughput.

Some older camcorder and DSLR models don’t output live audio. A fast way to check this is to connect the camera to a TV using an HDMI or SDI cable. If you can hear the sound on the TV, your camera has live audio throughput, and you’re all set.

Connector type

HDMI (High-Definition Multimedia Interface) is one of the most popular connector types for video. It comes in three varieties: HDMI micro, HDMI mini, and full-size HDMI. Check which one your camera uses and be ready to buy adapters.

Though popular, HDMI connectors are often said to be unreliable, especially the micro and mini varieties. It’s easy to accidentally pull out these cables in the middle of an important event. Additionally, HDMI cables are limited to about 100 ft in length, beyond which the signal starts to degrade.

Another popular connector is SDI (Serial Digital Interface). SDI is a faster connection than HDMI. SDI cable connectors also offer a physical locking mechanism and can run for distances of up to 300 ft.
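When planning a venue, the approximate cable limits quoted above can be turned into a quick lookup. This is a minimal sketch that uses only the rough figures from this article (about 100 ft for HDMI and up to 300 ft for SDI). Real-world limits depend on cable quality and signal format, and the function name is just an illustration.

```python
# Approximate maximum cable runs quoted in the text above, in feet.
MAX_RUN_FT = {"HDMI": 100, "SDI": 300}


def connectors_for_run(distance_ft: float) -> list[str]:
    """Return the connector types whose quoted maximum run covers the distance."""
    return [name for name, limit in MAX_RUN_FT.items() if distance_ft <= limit]


for distance in (25, 150, 400):
    options = connectors_for_run(distance) or ["none within the quoted limits"]
    print(f"{distance} ft: {', '.join(options)}")
```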

Simultaneous streaming and recording

It’s a good idea to have a backup recording of your live stream, just in case. Keep in mind that some camera models don’t allow simultaneous internal recording and video capture for streaming. A quick way to determine whether your camera can do both is to connect it to a TV and press record on the camera. If the recording starts and you are still able to see the live feed on the TV screen, your camera is able to stream and record simultaneously.

There is another option when it comes to simultaneous streaming and recording: all-in-one hardware encoders like Pearl-2 and Pearl Mini can both stream and record video at the same time.

Rotating display (flip screen)

You want to be able to see what you look like on camera. Cameras with a screen that rotates to face you and flips the image are designed for this. This handy feature saves the extra effort it takes to set up an external confidence monitor.

Going mobile

If you are planning to use your camera for a mobile live stream, be sure to review the camera’s battery life (and get a few additional battery grips). Also consider the weight, size, and shape of the camera. For example, the design of many DSLR cameras makes it challenging to hold them steady without a tripod for extended periods of time. Camcorders, meanwhile, were designed for handheld shooting.

Other important gear (lights, tripods, etc.)

As amazing as camera technology is today, respecting the basic principles of filmmaking is still crucial. It’s important to pay just as much attention to lighting and composition as to your choice of camera. Even the world’s best camera won’t be able to save a poorly lit shot. Similarly, a sturdy tripod can do more for image stabilization than the best image stabilization software. Check out our guide to building your own live streaming studio to learn the basics of lighting and camera setup.

How to connect a camera for live streaming

It’s a question we hear often: Can I connect my camera to a computer using an HDMI or USB cable and just start streaming?

The short answer is no.

Connecting a USB webcam to a computer is pretty straightforward because webcams are plug-and-play devices, but connecting “real” cameras works a bit differently. First, more often than not, the HDMI port on your computer is an OUT port, not an IN port. Second, the small USB port on your camera was designed for slow data transfer, not for the continuous, rapid, high-resolution image transfer required for streaming. In most cases, video capture for live streaming is possible only through HDMI. Lastly, HDMI video capture requires special drivers that computers lack by default.

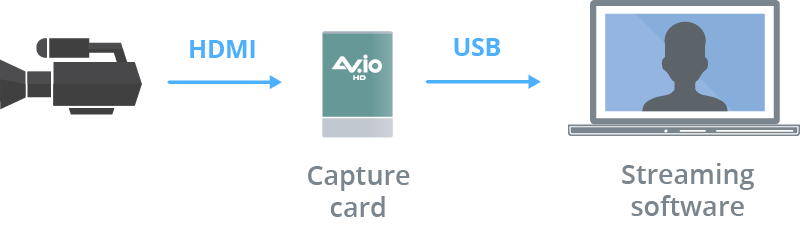

To capture HDMI video from a camcorder, DSLR, mirrorless, or any other non-USB camera, you will need a USB capture card.

A capture card is a small piece of hardware that converts the camera’s video output into a digital video format that your computer will understand. Capture cards come in various flavors depending on resolution (HD, 4K) and connector type (HDMI, SDI, VGA, DVI).

There is, however, a way to live stream from a camera without a capture card. Hardware encoders like Pearl-2, Pearl Mini, and Pearl Nano are purpose-built devices that can capture, stream, and record any HDMI or SDI camera signal. This way, you can connect the camera directly and stream without a capture card or a computer.
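The connection options described in this section boil down to a short decision: webcams plug in directly, while HDMI and SDI cameras need either a capture card or a hardware encoder. The sketch below summarizes that logic. The function name and the output labels are illustrative and do not belong to any product’s API.

```python
def connection_path(camera_output: str, has_hardware_encoder: bool = False) -> str:
    """Suggest how to get a camera's video into a live stream.

    camera_output is "usb_webcam", "hdmi", or "sdi" (illustrative labels).
    """
    output = camera_output.lower()
    if output == "usb_webcam":
        return "Plug the webcam directly into the computer (plug-and-play)."
    if output in ("hdmi", "sdi"):
        if has_hardware_encoder:
            return "Connect the camera to the hardware encoder; no capture card or computer needed."
        return f"Connect the camera to the computer through a {output.upper()} capture card."
    raise ValueError(f"Unknown camera output: {camera_output!r}")


print(connection_path("usb_webcam"))
print(connection_path("hdmi"))
print(connection_path("sdi", has_hardware_encoder=True))
```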

Types of live streaming cameras

There are various types of cameras you can use for streaming, including webcams, camcorders, DSLRs, mirrorless, PTZ, and action cameras. For some scenarios, one type of camera is clearly better suited than another. Other times budget is the deciding factor, and sometimes it comes down to personal preference.

Webcams

Webcams are USB-powered devices that connect directly to a computer. The plug-and-play capability makes them highly user friendly. Both computers and hardware encoders can accept webcams as USB video sources.

Webcams were designed for capturing talking heads in indoor settings. Though webcam image quality is almost always inferior to that of “real” video cameras, modern webcams can produce very good video, especially with proper lighting. Advanced webcam features include digital zoom, face recognition, and background replacement. Webcams often come with a clip that mounts onto a laptop or computer monitor, and some even have tripod-ready mounts.

Pro tip: Choose webcams with glass lenses over plastic ones: the video will look crisper and more vibrant.

Webcams are good for:

- Indoor video streaming

- Video conferencing/talking heads

- Online video game streaming

- Lecture narration

DSLR and Mirrorless cameras

Originally designed as digital analogs to traditional film cameras, DSLR and mirrorless cameras offer beautiful image quality. The lenses on these types of cameras are usually interchangeable, presenting opportunities for shot customization and fine cinematographic effects. Compared to camcorders in the same price category, DSLR and mirrorless have much larger image sensors, which means better image quality. Switching from a webcam to a DSLR or mirrorless is a great way to improve your stream’s video quality.

Because DSLR and mirrorless were primarily made to be photo cameras, some of them are poorly suited for video recording or live streaming. Older models from this category can be particularly prickly when it comes to meeting the four criteria for live streaming cameras:

- Not all models have a clean HDMI out (or any HDMI out at all)

Confirm that the camera has a video out, then check whether it’s a clean HDMI out. Online communities and professional reviews can usually help with this.

- Overheating

DSLR sensors and processors tend to overheat over long periods of operation. Many DSLRs will show an overheating warning after about 30 minutes in live view mode and shut off. This seems to be an issue with many Canon DSLR cameras in particular.

- Automatic shutoff

Some models shut off after a period of inactivity to preserve battery. This issue is usually resolved by either turning the energy saving timer off or connecting the camera to AC power.

Pro tip: Before purchasing a DSLR/mirrorless for live streaming, always check online for any known issues.

With the live streaming industry rapidly evolving, newer DSLR and mirrorless models are designed with live streaming in mind. This means that most recent releases meet the four criteria for live streaming cameras. Additionally, companies like Canon, Nikon, Sony, and Panasonic have recently released firmware updates that convert a DSLR/mirrorless model into a plug-and-play USB camera (much like a webcam).

Lenses: An additional expense with DSLR/mirrorless cameras

What sets DSLR and mirrorless apart from other camera types is the interchangeable lens option. This opens up a world of possibilities when it comes to fine-tuning your shot, but the costs for “glass” can also add up fast. Some high-end lenses can cost tens of thousands of dollars. Depending on the look you are going for, you may end up spending as much (if not more) on the lenses as you did on the camera. This is why lens choice is always something to think about. One way to keep the costs down and help you decide what you really need is to rent the lenses first.

DSLR and mirrorless cameras are good for:

- Vlogging, live shows, webinars

- Upgrading from webcam-quality streaming

- Creating a customized cinematic look using lenses

- Serving double-duty as a photo and video camera

- Travelling, specifically for mirrorless cameras, which are compact and lightweight

Camcorders

Unlike DSLR and mirrorless cameras, camcorders are purpose-built for capturing hours and hours of video. Designed as digital versions of their analogue counterparts, professional camcorders are the industry standard for video broadcasting. Feature sets range widely depending on price point. The bottom line is, if you are looking to produce a lot of live video content, you should strongly consider a camcorder.

Camcorder main features:

- Long battery life

- Subjects are always in focus (flipside: little control over depth of field)

- Easy to use (ergonomic design, point and shoot)

- No recording or streaming time limit (unlike DSLRs)

- Versatility – one lens fits all (flipside: less control over cinematics)

You can start streaming with the most basic prosumer camcorder like the Canon Vixia R800 for about $250 US, move up to a mid-level camcorder with more functionality and far better image quality for about $1,000 US, and graduate to a professional camcorder ranging anywhere from $2,500 to $10,000.

A small number of high-end camcorder models offer direct-from-the-camera live streaming. This means the camcorder has an encoder unit built in that can stream to any destination via Ethernet, Wi-Fi, or a cellular connection. These include models from Panasonic, Canon, Sony, and JVC (all of which are quite an investment).

Digital camcorders are good for:

- Beginner videographers (more affordable models)

- Large live productions like concerts, conferences, live news shows, etc.

- Events where a camera operator is present or can be brought in

PTZ cameras

PTZ (pan, tilt, and zoom) cameras can be operated remotely. These cameras typically have a flat base that can be securely mounted on a shelf, a ceiling, or a tripod. PTZ cameras are widely used in permanent installs at churches, conference and concert halls, lecture auditoriums, and other large spaces.

These cameras offer ample optical and digital zoom as well as 60 fps streaming, making them a viable solution for sports streaming. One camera operator can remotely control multiple PTZ cameras at once. Some PTZ camera models offer automatic tracking, which means the camera can identify and follow a speaker as they move about the room.

PTZ cameras generally do not feature audio capture options, which means audio has to be configured and synced separately.

PTZ cameras are good for:

- Church streaming, lecture capture, sports streaming

- Easy mounting and set-and-forget permanent installations in large spaces (churches, lecture halls, concert and conference venues, stadiums)

- Remote operation scenarios

- Automatic subject tracking

Optimal settings for a live streaming camera

To get the best possible video quality, be sure to check the following settings before going live. Some of these settings will be easily adjustable using dials on the camera body, while others may be hidden deep within the system menu.

Coordinate exposure and fps

Match the exposure time (shutter speed) to the frame rate: the denominator of the exposure fraction should be a multiple of the chosen frame rate. For example, if you are streaming at 30 frames per second (fps), set your camera’s exposure to 1/30; at 60 fps, make it 1/60; etc.
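The rule of thumb above is easy to check in code. Here is a minimal sketch, assuming exposure times are written as 1/N seconds and that N should be a whole multiple of the frame rate. The function names and the list of available shutter speeds are hypothetical.

```python
def exposure_matches_frame_rate(shutter_denominator: int, fps: int) -> bool:
    """True when the denominator of a 1/N exposure is a whole multiple of fps."""
    return shutter_denominator >= fps and shutter_denominator % fps == 0


def matching_exposures(fps: int, candidates: list[int]) -> list[int]:
    """Keep only the shutter denominators (30 means 1/30 s) that suit the frame rate."""
    return [d for d in candidates if exposure_matches_frame_rate(d, fps)]


# Hypothetical set of shutter speeds offered by a camera: 1/30, 1/50, 1/60, ...
available = [30, 50, 60, 100, 120, 250]
print(matching_exposures(30, available))  # [30, 60, 120]
print(matching_exposures(60, available))  # [60, 120]
```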

Fully open aperture

Open the aperture as wide as your lens allows. A wider aperture lets more light hit the sensor, and more light generally means better image quality.

Progressive, not interlaced

Video sources that are listed with the letter “p” are called progressive scan signals (e.g., 720p, 1080p), while those listed with “i” are interlaced (480i, 1080i). Progressive signals look better because they display both even and odd scan lines simultaneously. Interlaced signals alternate between even and odd scan lines, making the video look stripey. Always go for “p” for streaming, not “i”.

Deciphering a camera’s streaming resolution and frame rate

In the camera features lists that follow, you’ll see the following sets of numbers and letters:

- 720p60

- 1080p30

- 4K30

What does this mean? These describe the maximum resolution (e.g., 720p, 1080p, 4K) and frame rate (e.g., 30, 60) the camera is capable of streaming.
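If you want to compare these labels programmatically, they can be split into their parts with a small parser. The sketch below is one possible approach, not a standard: the function name and the returned dictionary layout are assumptions, and “4K” labels are treated as progressive because they carry no scan letter.

```python
import re

# Matches labels such as "720p60", "1080p30", "1080i60", and "4K30".
MODE_PATTERN = re.compile(r"^(?P<resolution>\d+[pi]|4K)(?P<fps>\d+)$")


def parse_mode(label: str) -> dict:
    """Split a label like "1080p30" into resolution, frame rate, and scan type."""
    match = MODE_PATTERN.match(label)
    if match is None:
        raise ValueError(f"Unrecognized mode label: {label!r}")
    resolution = match["resolution"]
    return {
        "resolution": resolution,
        "fps": int(match["fps"]),
        # Assumption: anything not marked "i" (including "4K") is progressive.
        "progressive": not resolution.endswith("i"),
    }


for label in ("720p60", "1080p30", "4K30"):
    print(label, parse_mode(label))
```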

Best live streaming cameras for any budget

We’ve broken the list down into four budget categories. The idea is to highlight the features you can expect at each price point. The model picks are based on our own experience as well as the opinions of expert streaming community members. Prices are as of November 2020.

1. Minimal budget ($50-250) — Just getting started with streaming

This category includes two types of cameras: webcams and basic camcorders.

Logitech C922 Pro HD Stream Webcam ($120)

Along with its sibling, the C920, the Logitech C922 is arguably one of the most popular webcams on the market today. It remains our go-to recommendation for easy plug-and-play USB video.

Microsoft LifeCam Studio for Business ($80-100)

Another affordable webcam option. We recommend the slightly more expensive Studio for Business model over the Cinema model because it comes with 1080p resolution, digital zoom, and a tripod mount.

Logitech BRIO Webcam ($200)

Logitech BRIO offers some of the best video quality you can expect to get from a webcam. High dynamic range guarantees vibrant colors and balanced lights and shadows. The webcam adapts well to any lighting environment, automatically adjusting exposure and contrast to compensate for glare and backlighting. Unfortunately, the 4K feature is only available for recording, not streaming.

Canon Vixia HF R800 ($250)

The Canon Vixia HF R800 is a popular option among those getting started with event streaming. This camera is easy for anyone to operate, handheld or on a tripod. Though not ideal for professional productions, the Canon Vixia HF R800 offers a quick-and-dirty way to set up live streaming for small events, both indoors and outdoors.

Panasonic HC-V180K ($200)

Much like the Canon Vixia HF R800, the Panasonic HC-V180K offers an easy way to capture video for small events. This is one of the most affordable options when it comes to basic prosumer camcorders.

2. Starter budget ($500-700) — Beginners looking for better image quality

If you’re looking to upgrade from the quality you’d get from a webcam or basic camcorder, this category is for you. Alternatively, if you’re just getting started with streaming and want to jump directly to advanced video quality (skipping webcams altogether), this category is also for you. This category predominantly features entry-level mirrorless and DSLR cameras, which offer crisp, professional-looking video. At the same time, the interchangeable lenses offer additional control over framing, depth of field, and blurred background effects.

Compared to high-end DSLRs, more affordable DSLRs may offer less advanced autofocus capabilities. Keep this in mind for situations when you’re filming yourself solo: good autofocus goes a long way for talking-head videos.

Sony Alpha a5100 ($500-$600)

Ever since its release in 2014, the Sony a5100 has been the top affordable camera pick for many looking to stream. Its small, portable, and lightweight design makes it a perfect travel companion. Overall, this is a solid entry-level mirrorless camera choice for live streaming.

Canon EOS M200 ($550-$650)

This 2019 addition to the Canon mirrorless family quickly became popular with the live streaming community. The M200 is one of the few entry-level mirrorless cameras that offer 4K streaming. This, along with its impressive video quality, makes the M200 a great value.

Canon EOS Rebel SL3 (EOS 250D) ($650)

If you’re looking for an affordable 2-in-1 photo and video camera, consider this DSLR. The Canon EOS Rebel SL3 (also known as the 250D) is the most affordable choice on the market right now. While many DSLR cameras are unsuitable for live streaming due to the automatic safety shutoff feature and the autofocus box display issue, the SL3 doesn’t exhibit either issue. This camera is also capable of streaming in 4K.

Panasonic Lumix G7 ($500)

The Panasonic Lumix G7 is a great deal. This Micro Four Thirds mirrorless camera (trapped in a DSLR body) offers great image quality. The external 3.5 mm audio input makes it easy to route professional audio through the camera, avoiding AV sync issues. The G7 is simple to set up and use, and it’s not fussy when it comes to output settings. All these features combined with the price make the G7 a great value camera for live streaming.

Panasonic HC-V770 ($600)

The Panasonic HC-V770 is a mid-level prosumer camcorder. Like all camcorders, it’s easy to operate, on a tripod or off. This particular model offers a few noteworthy features. One is good image stabilization for handheld shooting and fast-paced action. Another feature allows you to pair your smartphone to the camera and use it as a second camera angle for a picture-in-picture layout.

3. Advanced user budget ($900-1600) — Transitioning to professional-quality live streaming

This section is for those looking to transition from basic and mid-level event streaming to professional, polished live productions. This category includes advanced DSLRs, mirrorless cameras, and prosumer camcorders.

Compared to the lower budget categories above, DSLRs in this category offer larger sensor sizes and better image quality. For DSLR and mirrorless cameras in this category, 4K output virtually comes standard, whereas for camcorders this is not necessarily so. Compared to DSLR/mirrorless cameras, camcorders also come with smaller sensor sizes, though their overall design is better equipped for shooting video. Here, many camcorders will feature professional video and audio inputs, such as SDI and XLR. This is particularly important for professional productions because SDI cables can run longer than HDMI, and they offer a more secure and faster way to transfer video data.

Panasonic Lumix GH4 (body+lens starts at $900)

One of the most popular mirrorless models among social streamers, and for good reason. The Lumix GH4 camera was designed with video in mind: it never overheats during extended periods of work, it doesn’t shut off automatically, and it offers up to 3.5 hours of battery life. For a moderate price, you get a 4K-ready camera in a durable, magnesium alloy, weather-sealed body.

Sony a6400 ($900)

The Sony a6400 is one of the cameras we use for our Live @ Epiphan show. A few steps up from the a5100 and an update of the a6300, it’s a great balance between portability, image quality, and value. The Sony a6400 is also known for its fantastic autofocus capabilities.

Sony A7 II ($1200)

The Sony A7 Mk II is likely the most affordable full-frame sensor camera on the market. This mirrorless camera feels very solidly built and durable, and it offers undeniably great image quality. The A7 II is a good choice for anyone seeking a well-priced, feature-packed, full-frame camera that can shoot both video and still photography. Due to its below-average battery life and overall heft, this camera may not be ideal for on-the-go shooting.

Canon Vixia HF G50 ($1,100)

The Canon Vixia HF G50 is the top-tier Vixia series prosumer camcorder. It offers a large, highly sensitive image sensor and a lot of control over settings including aperture, brightness, focus, exposure, ISO, white balance, and more. Canon’s advanced optical image stabilization helps correct camera shaking so even handheld video will look sharp and steady.

Canon XA11 ($1,300)

A step up from the Vixia series, Canon’s XA series is a step into professional entry-level camcorder territory. The XA11 offers XLR audio input and a shotgun mic mount, which significantly expand quality audio input options. Users commend this camera for its autofocus and face tracking, image stabilization, and long battery life.

4. Professional budget ($1,600+) — For mission-critical live productions

This category goes into heavy-duty, high-end professional camcorders, advanced DSLR/mirrorless, and professional cinema cameras. You can expect to see full-size HDMI 2.0, SDI, and XLR inputs for professional audio. Other professional features might include LCD/EVF displays that assist shooting (e.g., focus and exposure peaking, zebra, waveform display), built-in ND filters, LUTs, and more.

Many cameras in this category offer advanced remote control and management options, which is something that becomes crucial during professional live productions.

These are refined, professional-grade tools with extensive settings menus, so adequate experience is required to use these cameras proficiently.

It’s difficult to estimate the upper limit for a professional camera budget. Some systems can cost upwards of tens of thousands of dollars. Accordingly, below is but a small selection of popular professional camera models.

Panasonic Lumix GH5 ($1600+)

A successor to the GH4, the GH5 also carries a Micro Four Thirds sensor and streams in 4K. Beyond that, it offers extended color depth (10-bit color with 4:2:2 chroma subsampling), fast autofocus, great image stabilization, and a few other advanced features. The GH5’s well-balanced affordability and rich feature set make it a popular camera choice. This is a sturdy, solidly built camera, and according to Panasonic also freeze-proof, dust-proof, and splash-proof.

Stream without a capture card: Recently, Panasonic released the LUMIX Tether for Streaming (Beta) software, which lets you connect the camera directly to your computer via USB to start streaming.

Panasonic AG-UX180 ($2,700-$3,700)

Among other firsts, this camera is said to have the industry’s widest angle of 24 mm and the world’s first 20x optical zoom in a camcorder with a 1.0-type sensor. Other features include an advanced optical image stabilizer, five-axis hybrid image stabilizer, and intelligent autofocus. Overall, the Panasonic AG-UX180 strikes a good balance between professional features and affordability.

Canon XF400 ($2,500+)

This versatile and compact camcorder offers 4K streaming, remote control, and professional-looking video. Built-in Wi-Fi allows you to control the camera’s functions through a web browser. Camera control is also available using the included WL-D89 remote.

Panasonic AG-CX350 4K Camcorder ($3,700)

Aside from boasting all the standard professional features, this camera also offers unique networking features. The AG-CX350 has built-in streaming, which means you can stream directly from it using standard streaming protocols without a PC or an encoder unit. The camera features an Ethernet port through which you can stream directly to the Internet. An optional USB wireless adapter allows you to stream wirelessly. 4G/LTE connectivity is also possible using third-party dongles. The CX350 has built-in support for NDI via NDI|HX, which provides automatic discovery and ease of use. It also allows ultra-low latency streaming, and supports camera control and tally.

Canon EOS C70 Cinema Camera ($5,500+)

The C70 body may look like a full-frame DSLR camera, but it is in fact a cinema camera. It is said to combine the best of both worlds: the quality and performance of the cine line mixed with the portability, versatility, and ergonomics of the EOS R line. The Super 35 mm Dual Gain Output CMOS sensor can capture an incredibly wide dynamic range, which means a high level of detail in both the highlights and the shadows, a vibrant image, as well as low noise levels even in low-light conditions.

Conclusion

There you have it: our picks for the best cameras for live streaming for any budget. Naturally, there are many other streaming cameras on the market. This list is based on our own experience and the experiences of our customers.

Did we miss anything? Which camera are you using to go live? Feel free to tell us in the comments which you think is the best camera for live streaming!
