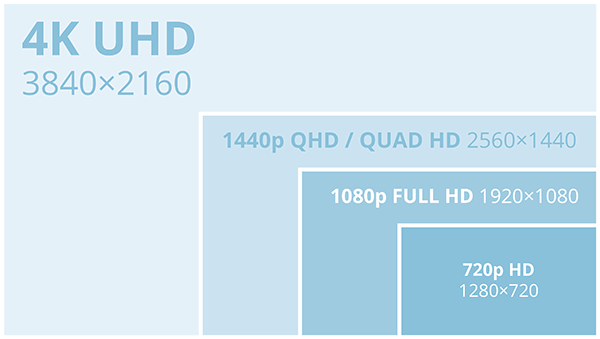

1440p, or QHD, is a high-definition resolution that sits between Full HD (1080p) and 4K (2160p). Its sharp picture makes it popular in laptops and cellphones, but it is not as ubiquitous as 1080p or 4K.

There are a few reasons for that, and they’re worth exploring. Although television makers considered making 1440p the new standard after 1080p, it was never universally adopted, and the new standard is now 4K. But though 1440p might be the most neglected of the three resolutions, it still has a lot to offer. Let’s explore its common uses and benefits!

Why is it called 1440p?

Those familiar with resolution nomenclature probably know that the number refers to the height of the resolution in pixels. So, just as 1920×1080 is shortened to 1080p, 2560×1440 is shortened to 1440p. The letter after the number, a ‘p’ in this case, describes how the image is drawn on the screen: progressive (1440p) or interlaced (1440i).

An interlaced resolution is painted on the screen in alternating passes: one pass displays only the even-numbered lines, the next only the odd-numbered lines. Switching back and forth between these gives the human eye a full picture, but it also produces the distinctive “flicker” associated with older CRT monitors. By contrast, a progressive resolution draws every line in every frame, giving a much cleaner picture.
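To make the difference concrete, here is a toy Python sketch that lists which scan lines each pass draws, with lines numbered from 1. It is a simplification, not how any real display works internally: in interlaced video the alternating half-pictures are usually called “fields”, and the function names below are made up for illustration.

```python
def interlaced_fields(num_lines: int) -> tuple[list[int], list[int]]:
    """Split a frame's scan lines into the two fields an interlaced signal alternates between."""
    odd_field = [line for line in range(1, num_lines + 1) if line % 2 == 1]
    even_field = [line for line in range(1, num_lines + 1) if line % 2 == 0]
    return odd_field, even_field


def progressive_frame(num_lines: int) -> list[int]:
    """A progressive frame draws every scan line, top to bottom, every time."""
    return list(range(1, num_lines + 1))


odd, even = interlaced_fields(1440)
print(len(odd), len(even))           # 720 720  (each pass carries half the lines)
print(len(progressive_frame(1440)))  # 1440     (a progressive frame carries all of them)
```

Put together, the two interlaced passes cover exactly the same lines as one progressive frame; the difference is that they arrive at different moments.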

Now that you know why 1440p is named that way, let’s throw another name at you. It’s also called Quad High Definition, or QHD, because it has four times as many pixels as 720p HD. You might also see 1440p referred to as WQHD, or Wide Quad HD, indicating that its aspect ratio is 16:9 rather than 4:3. However, don’t confuse it with UWQHD! Ultra-Wide Quad HD has a resolution of 3440×1440 and is distinct from 1440p.
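If you want to check the arithmetic behind these names, the short Python sketch below computes the pixel count, the ‘p’ shorthand and the reduced aspect ratio for each resolution mentioned here. The resolution table and helper names are illustrative, not taken from any library.

```python
from math import gcd

# Resolutions mentioned in this article, as (width, height) in pixels.
RESOLUTIONS = {
    "720p (HD)": (1280, 720),
    "1080p (Full HD)": (1920, 1080),
    "1440p (QHD/WQHD)": (2560, 1440),
    "3440x1440 (UWQHD)": (3440, 1440),
    "2160p (4K UHD)": (3840, 2160),
}


def short_name(height: int) -> str:
    """Resolution shorthand: the height in pixels plus 'p' for progressive scan."""
    return f"{height}p"


def aspect_ratio(width: int, height: int) -> str:
    """Reduce width:height to lowest terms, e.g. 2560x1440 -> 16:9."""
    d = gcd(width, height)
    return f"{width // d}:{height // d}"


hd_pixels = 1280 * 720
for name, (w, h) in RESOLUTIONS.items():
    pixels = w * h
    print(f"{name:18} {short_name(h):6} {aspect_ratio(w, h):6} "
          f"{pixels:>10,} px  {pixels / hd_pixels:.2f}x 720p")
```

Running it shows that 2560×1440 is exactly 4× the pixels of 1280×720, and that 3440×1440 reduces to 43:18, which is usually marketed as 21:9.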

After you’ve read that paragraph a few times, let’s move on to real world applications.

Where do you usually see 1440p?

The most common place to find 1440p is on laptops. It’s one of the most widespread PC gaming resolutions, and QHD laptops are reasonably priced. Recently, gaming consoles have also made a foray into 1440p, with the PS4 Pro and Xbox One S both expanding their support for QHD and 4K. 1440p is also quite common in cellphones, where it greatly increases pixel density on the small screen and sharpens small images.
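Pixel density is the number of pixels along the screen’s diagonal divided by the diagonal length in inches. Here is a minimal Python sketch comparing 1080p and 1440p on the same screen; the 5.5-inch phone size is an assumed example, not a specific device.

```python
from math import hypot


def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixel density: pixels along the diagonal divided by the diagonal length in inches."""
    return hypot(width_px, height_px) / diagonal_in


# Hypothetical 5.5-inch phone screen, same physical size for both resolutions.
for label, (w, h) in {"1080p": (1920, 1080), "1440p": (2560, 1440)}.items():
    print(f"{label}: {pixels_per_inch(w, h, 5.5):.0f} PPI")
```

Because 2560×1440 scales both dimensions of 1920×1080 by exactly 4/3, a 1440p screen always has 4/3 the pixel density of a 1080p screen of the same size: roughly 534 PPI versus 401 PPI at 5.5 inches.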

And of course, you’ll easily find it in video sources such as cameras. Any 4K camera is also capable of 1440p, and you can even find a small, portable 1440p source from GoPro. Just about anywhere you look for 1440p, you can find it.

This means that if you’re interested in capturing and recording, there will be plenty of times when you need to capture a 1440p source. That’s one of the reasons we’ve made sure AV.io 4K and Pearl-2 are fully capable of working with QHD, capturing and recording through either the Pearl-2 admin panel or the software of your choice.

1440p compared to 1080p

There are obviously scenarios where you have no option but to use QHD. But when you do have a choice, what are the advantages of this resolution? Why choose it over 1080p or 4K?

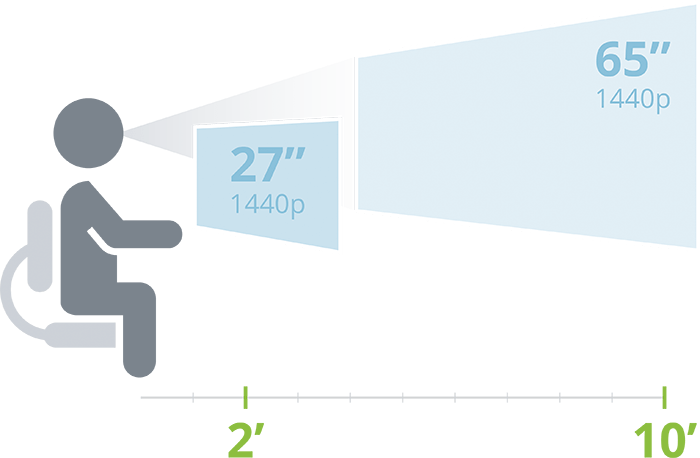

To begin with, moving from 1080p to 1440p is a big jump in picture quality: 2560×1440 has about 78% more pixels than 1920×1080. Whether you notice the change depends on the size of your screen and how close you are to it. For a computer screen a couple of feet from your face, you can see a difference if the screen is larger than 27 inches. But for a television you watch from a distance, it’s hard to notice much of a difference with 1440p unless the screen is larger than 65 inches. At that size, though, 1440p is ideal.
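One way to reason about screen size and viewing distance together is to count how many pixels fall within each degree of your field of view. The Python sketch below does that. The 60 pixels-per-degree threshold is a common rule of thumb (about one arcminute per pixel for 20/20 vision) and is treated here as an assumption; the sketch illustrates the trend rather than reproducing the exact screen-size cut-offs above.

```python
from math import hypot, radians, tan

ACUITY_PPD = 60  # assumption: rule-of-thumb limit of ~1 arcminute per pixel (20/20 vision)


def pixels_per_degree(width_px: int, height_px: int,
                      diagonal_in: float, distance_in: float) -> float:
    """Approximate pixels per degree of visual angle at the centre of the screen."""
    ppi = hypot(width_px, height_px) / diagonal_in
    # Screen distance (in inches) spanned by one degree of view at this viewing distance.
    inches_per_degree = 2 * distance_in * tan(radians(0.5))
    return ppi * inches_per_degree


# Monitor sizes viewed from about two feet (24 inches) away.
for size in (24, 27, 32):
    for label, (w, h) in {"1080p": (1920, 1080), "1440p": (2560, 1440)}.items():
        ppd = pixels_per_degree(w, h, size, 24)
        note = "below" if ppd < ACUITY_PPD else "at or above"
        print(f'{size}" {label}: {ppd:.0f} px/degree ({note} the {ACUITY_PPD} px/degree rule of thumb)')
```

Lower numbers mean individual pixels are easier to make out, so a larger screen at the same distance, or a closer seat, gains more from the extra pixels of 1440p.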

Making videos look good isn’t the only benefit. 1440p can also help on screens too small for the picture-quality difference to be noticeable, because of the extra definition it gives small images. On phone screens, icons become crisper, and on computer monitors you get a larger workspace. Being able to fit more on the screen while keeping it more clearly defined than on a Full HD monitor makes 1440p perfect for video and photo editing. It can sometimes cause readability issues, since text is well defined but much smaller on screen, but even on smaller screens 1440p can be beneficial.

1440p is also commonly chosen over 1080p in high-level gaming, where image quality is almost as important as frames per second (fps). Whether you should use it depends on how powerful your gaming setup is. The need to balance processing power against video quality brings us to the next obvious comparison…

1440p compared to 2160p

4K has all the advantages over 1080p that 1440p has, but to an even greater degree. So why not simply use 2160p? The most important questions are whether your computer can perform up to your expectations at that resolution, and whether the higher price is worth it.

4K demands a lot from your GPU: a 3840×2160 frame has 2.25 times as many pixels as a 2560×1440 frame. If you’re doing something demanding like gaming or capturing video, research whether your setup can handle it. With gaming, look especially closely at what the higher resolution will do to your frame rate. In most cases, if your fps would suffer at all from upgrading to 4K, QHD is the better choice. If you’re capturing video, 4K requires a recent Intel Core i7 processor and a powerful GPU, but you can get by with much less when capturing 1440p. With hardware assistance from a device like AV.io 4K or Pearl-2, you can capture QHD easily.
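As a rough illustration of why 4K is so much heavier than 1440p, the Python sketch below compares pixel throughput and raw (uncompressed) data rates at 60 fps. It assumes 24 bits per pixel (8-bit RGB) and a purely pixel-bound GPU, which real games and capture pipelines are not, so treat the numbers as ballpark figures; the function names are hypothetical.

```python
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "2160p": (3840, 2160),
}


def pixel_rate(width: int, height: int, fps: float) -> float:
    """Pixels per second a GPU must render (or a capture device must ingest)."""
    return width * height * fps


def uncompressed_gbps(width: int, height: int, fps: float, bits_per_pixel: int = 24) -> float:
    """Raw video bandwidth in Gbit/s before compression; 24 bpp (8-bit RGB) is an assumption."""
    return pixel_rate(width, height, fps) * bits_per_pixel / 1e9


def naive_fps_estimate(fps_at_base: float, base: tuple[int, int], target: tuple[int, int]) -> float:
    """Very rough guess that frame rate falls in proportion to pixel count."""
    return fps_at_base * (base[0] * base[1]) / (target[0] * target[1])


for name, (w, h) in RESOLUTIONS.items():
    print(f"{name}@60: {pixel_rate(w, h, 60) / 1e6:.0f} Mpx/s, "
          f"{uncompressed_gbps(w, h, 60):.1f} Gbit/s uncompressed")

# If a (hypothetical) game runs at 90 fps in 1440p, a purely pixel-bound guess for 4K:
print(f"{naive_fps_estimate(90, RESOLUTIONS['1440p'], RESOLUTIONS['2160p']):.0f} fps")  # 40 fps
```

The 2.25× pixel ratio between 4K and 1440p is exact; the frame-rate estimate is not, since many games are also limited by the CPU and other factors besides pixel count.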

Then, of course, there is cost to consider. It’s not surprising that newer, higher-resolution monitors are much more expensive, sometimes twice the price of their 1440p equivalents. So again, you have to decide whether the extra definition is worth it. The general consensus is that for a computer monitor a few feet from your face, you can notice a difference between QHD and 4K if the screen is larger than about 32 inches. And with your GPU working hard enough to render 4K video, there is usually a dip in frames per second. Whether that is an acceptable trade-off for the higher resolution comes down to personal priorities, and how you balance picture definition against a consistent frame rate. It’s unusual to say this in the technology world, but here there truly is no wrong answer!

Making 1440p work for you

1440p isn’t the way of the future; that belongs to 4K. But while 1080p is getting older and 2160p is on the cutting edge, QHD is perfect for the technology that exists right now. It’s easy to get working on almost any platform, and your frame rate won’t suffer for it. Though it might not be as future-proof as 4K, it sits at a perfect Goldilocks medium: not too small for your big screen, and not too expensive or hard to work with.

How do you use 1440p?

Monitor makers are lagging behind television makers: goodies like Dolby Vision HDR and higher refresh rates are rarer in monitors [makers seem to be obsessing over gimmicks like curved screens].

So, with makers like Vizio and TCL leading the charge, I’m becoming interested in using a 4K television as a monitor.

But I don’t really need or want 4K. It’s overkill, and it uses much more electricity, needs a bigger/faster GPU, etc. The sweet spot seems to be QHD. Sadly, both sectors [monitor and TV] seem to be going 4K, so the only way I’ll probably be able to get QHD is through downscaling from 4K.

So my question is [cue drum roll!]: can 4K sets realistically/effectively downscale to QHD rez [without the use of 4K-capable GPUs]???